Researchers unveil automated robot visual mapping technique

Researchers at QUT have developed an automated system that improves how robots map and navigate the world by making vision-based mapping systems more adaptable to different environments.

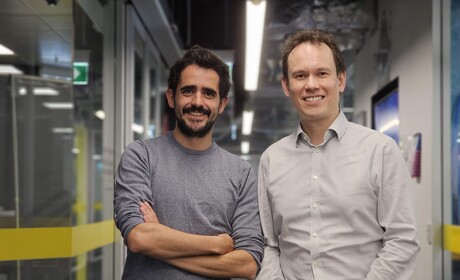

Lead researcher Dr Alejandro Fontan Villacampa, from the QUT School of Electrical Engineering & Robotics, said visual SLAM (simultaneous localisation and mapping) is the technology that helps devices such as drones, autonomous vehicles and robots navigate.

“It enables them to create a map of their surroundings and keep track of their location within that map simultaneously,” he said.

“SLAM systems traditionally rely on specific types of visual features: distinctive patterns within images used to match and map the environment. Different features work better in different conditions, so switching between them is often necessary, but this switching has been a manual and cumbersome process, requiring a lot of parameter tuning and expert knowledge.”
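To illustrate why switching features involves retuning, here is a minimal Python sketch. It is not taken from AnyFeature-VSLAM or ORB-SLAM2. It assumes two descriptor types: binary descriptors compared by Hamming distance (as ORB uses) and floating-point descriptors compared by Euclidean distance (as SIFT uses). The `FeatureConfig` class, the `FEATURES` table and the threshold values are hypothetical and chosen only for illustration. The point is that the same matching loop needs a different distance function and acceptance threshold for each feature type.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Sequence, Tuple


def hamming(a: int, b: int) -> float:
    """Distance between binary descriptors packed into ints (ORB-style)."""
    return float(bin(a ^ b).count("1"))


def l2(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between float descriptors (SIFT-style)."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


@dataclass(frozen=True)
class FeatureConfig:
    """Hypothetical per-feature settings that must change when the feature changes."""
    name: str
    distance: Callable[..., float]
    match_threshold: float  # largest distance still accepted as a match


# Hypothetical settings: the thresholds are illustrative, not tuned values.
FEATURES: Dict[str, FeatureConfig] = {
    "orb": FeatureConfig("orb", hamming, 50.0),
    "sift": FeatureConfig("sift", l2, 0.7),
}


def match(desc_a: Sequence, desc_b: Sequence, cfg: FeatureConfig) -> List[Tuple[int, int]]:
    """Brute-force nearest-neighbour matching; returns (index_a, index_b) pairs."""
    matches = []
    for i, da in enumerate(desc_a):
        best_j, best_d = -1, float("inf")
        for j, db in enumerate(desc_b):
            d = cfg.distance(da, db)
            if d < best_d:
                best_j, best_d = j, d
        # Keep the nearest neighbour only if it passes this feature's threshold.
        if best_j >= 0 and best_d <= cfg.match_threshold:
            matches.append((i, best_j))
    return matches


print(match([0b1010], [0b1011, 0b0000], FEATURES["orb"]))         # [(0, 0)]
print(match([[0.0, 0.0]], [[3.0, 4.0], [0.1, 0.0]], FEATURES["sift"]))  # [(0, 1)]
```

Swapping `FEATURES["orb"]` for `FEATURES["sift"]` changes both the metric and the threshold. In a full SLAM system, many more settings, such as the number of features extracted per image, would typically change along with them, which is where the manual effort comes from.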

Fontan said QUT’s new system, AnyFeature-VSLAM, adds automation to ORB-SLAM2, a visual SLAM system that is widely used around the world.

“It enables a user to seamlessly switch between different visual features without laborious manual intervention,” he said. “This automation improves the system's adaptability and performance across various benchmarks and challenging environments.”

Research supervisor Professor Michael Milford, Director of the QUT Centre for Robotics, said the key innovation of AnyFeature-VSLAM was its automated tuning mechanism.

“By integrating an automated parameter tuning process, the system optimises the use of any chosen visual feature, ensuring optimal performance without manual adjustments,” he said. “Extensive experiments have shown the system’s robustness and efficiency, outperforming existing methods in many benchmark datasets.”
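As a rough illustration of what automatic tuning can mean, the sketch below calibrates a single parameter, the match-distance threshold, from labelled examples rather than by hand. This is a generic technique assumed for illustration, not the procedure used by AnyFeature-VSLAM. The function `tune_threshold` and its inputs (distances measured for known-correct and known-incorrect matches) are hypothetical.

```python
from typing import Optional, Sequence


def tune_threshold(pos_dists: Sequence[float], neg_dists: Sequence[float]) -> Optional[float]:
    """Pick the distance threshold that best separates correct from incorrect matches.

    Every observed distance is tried as a candidate threshold. The score counts
    correct matches accepted (distance <= threshold) plus incorrect matches
    rejected (distance > threshold). Ties keep the smallest, strictest threshold.
    """
    best_t, best_score = None, -1
    for t in sorted(set(pos_dists) | set(neg_dists)):
        accepted_true = sum(d <= t for d in pos_dists)
        rejected_false = sum(d > t for d in neg_dists)
        score = accepted_true + rejected_false
        if score > best_score:
            best_t, best_score = t, score
    return best_t


# Hypothetical calibration data: distances for known-good and known-bad matches.
print(tune_threshold([10, 20, 30], [60, 70, 25]))  # 20
```

The same search could be rerun for each feature type on its own calibration data, which is the kind of step that replaces hand-tuning when a user switches features.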

Fontan said the development was a promising step forward in visual SLAM technology.

“By automating this tuning process, we are not only improving performance but also making these systems more accessible and easier to deploy in real-world scenarios.”

The work will be presented at the Robotics: Science and Systems (RSS) 2024 conference in Delft, the Netherlands. Milford said RSS was one of the most prestigious events in the field, attracting the world’s leading robotics researchers.

“The presentation of AnyFeature-VSLAM at RSS 2024 highlights the importance and impact of this research,” he said. “The conference will provide a platform for showcasing this breakthrough to an international audience.”

“Having our research accepted for presentation at RSS 2024 is a great honour,” Milford added. “It shows the significance of our work and the potential it has to advance the field of robotics.”

The project was partially funded by an Australian Research Council Laureate Fellowship and the QUT Centre for Robotics.
