Improving human-to-robot handovers

For a few years now, collaborative robots have been making steady inroads into manufacturing facilities around the world. Although they are safer to work alongside, they are still much like traditional industrial robots when it comes to their end-effector capabilities.

In most cases so far this has not been a problem, but their usefulness could be greatly improved if their interactions with human co-workers were more natural. Improving the way they give objects to, and take objects from, humans would open them up to a wider range of applications.

To that end, researchers at NVIDIA hope to improve human-to-robot handovers by treating them as a hand grasp classification problem.

In a paper titled ‘Human Grasp Classification for Reactive Human-to-Robot Handovers’, researchers at NVIDIA’s Seattle AI Robotics Research Lab describe a proof of concept that they claim produces more fluent human-to-robot handovers than previous approaches. The system classifies how a human is holding an object and plans the robot’s trajectory to take the object from the person’s hand.

“At the crux of the problem addressed here is the need to develop a perception system that can accurately identify a hand and objects in a variety of poses,” the researchers said. This is not an easy task, they added, because the hand and the object often occlude each other.

The team broke the problem into several phases. First, the researchers defined a set of grasp types describing how the human hand holds the object: ‘on-open-palm’, ‘pinch-bottom’, ‘pinch-top’, ‘pinch-side’ and ‘lifting’.

“Humans hand objects over in different ways,” the researchers said. “They can present the object on their palm or use a pinch grasp and present the object in different orientations. Our system can determine which grasp a human is using and adapt accordingly, enabling a reactive human–robot handover. If the human hand isn’t holding anything, it could be either waiting for the robot to hand over an object or just doing nothing specific.”
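The grasp categories above, together with the two empty-hand cases the researchers mention, can be sketched as a small label set feeding a high-level decision. This is a minimal illustration under stated assumptions, not NVIDIA’s implementation: the names `WAITING` and `NOTHING`, the order of the classifier’s outputs, the hand-picked logits standing in for a real network’s scores, and the `robot_action` mapping to "take", "give" and "idle" are all hypothetical.

```python
from enum import Enum
import math


class HandState(Enum):
    # The five grasp types defined by the researchers.
    ON_OPEN_PALM = "on-open-palm"
    PINCH_BOTTOM = "pinch-bottom"
    PINCH_TOP = "pinch-top"
    PINCH_SIDE = "pinch-side"
    LIFTING = "lifting"
    # Hypothetical names for the two empty-hand cases the researchers describe.
    WAITING = "waiting"
    NOTHING = "nothing"


# Assumed order of the classifier's output scores (illustrative only).
LABELS = list(HandState)


def softmax(logits):
    """Convert raw scores into probabilities (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits):
    """Return the most probable hand state and its probability."""
    if len(logits) != len(LABELS):
        raise ValueError(f"expected {len(LABELS)} logits, got {len(logits)}")
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return LABELS[best], probs[best]


def robot_action(state):
    """Map a hand state to a high-level robot behaviour (hypothetical)."""
    if state is HandState.WAITING:
        return "give"  # empty hand waiting for the robot to hand an object over
    if state is HandState.NOTHING:
        return "idle"  # empty hand doing nothing specific
    return "take"      # one of the five grasp types: take the object


# Hand-picked scores standing in for a real network's output.
state, p = classify([0.1, 0.2, 3.0, 0.1, 0.0, -1.0, -1.0])
print(state.value, robot_action(state))
```

In the real system the "take" branch is where the grasp type matters, since it determines how the robot approaches the hand; that planning step is not sketched here.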

Next, they used an Azure Kinect RGB-D camera to capture a dataset of 151,551 images covering eight subjects with various hand shapes and hand poses. “Specifically, we show an example image of a hand grasp to the subject, and record the subject performing similar poses from 20 to 60 seconds. The whole sequence of images are therefore labelled as the corresponding human grasp category. During the recording, the subject can move his/her body and hand to different position to diversify the camera viewpoints. We record both left and right hands for each subject.”
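The sequence-level labelling described in that quote, where every frame of a recording takes the category of the example grasp shown to the subject, amounts to a simple fan-out from recordings to frames. The sketch below assumes a hypothetical `Recording` record and made-up file names; it illustrates the idea rather than reproducing the researchers’ data pipeline.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Recording:
    """One 20 to 60 second capture of a subject imitating an example grasp.

    All field names are hypothetical.
    """
    subject_id: int
    hand: str           # "left" or "right"
    grasp: str          # category of the example image shown to the subject
    frames: List[str]   # paths of the captured RGB-D frames


def label_frames(recordings: List[Recording]) -> List[Tuple[str, str]]:
    """Sequence-level labelling: every frame inherits its recording's grasp label."""
    samples = []
    for rec in recordings:
        for frame in rec.frames:
            samples.append((frame, rec.grasp))
    return samples


recs = [
    Recording(1, "left", "pinch-top", ["s1_l_0000.png", "s1_l_0001.png"]),
    Recording(1, "right", "lifting", ["s1_r_0000.png"]),
]
print(label_frames(recs))
```

Labelling whole sequences this way avoids annotating each of the roughly 150,000 images by hand, at the cost of trusting that the subject held the demonstrated grasp for the entire recording.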

Finally, the robot adjusts its orientation and trajectory according to the classified grasp. The handover task is modelled as a Robust Logical-Dynamical System (RLDS), an existing approach that, given the grasp classification, generates motion plans that avoid contact between the gripper and the human hand. The system was trained on a single NVIDIA TITAN X GPU using CUDA 10.2 and the PyTorch framework, and tested on a single NVIDIA RTX 2080 Ti GPU.

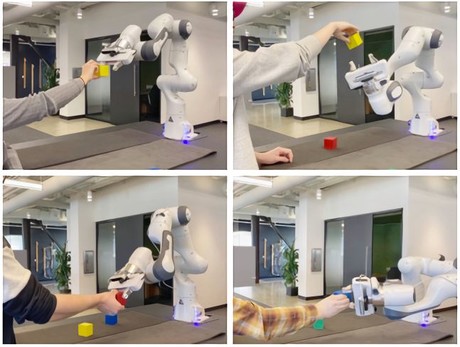

To test their system, the researchers used two Franka Emika cobot arms, set up on identical tables in different locations, to take coloured blocks from a human’s grasp. According to the researchers, the system achieved a grasp success rate of 100% and a planning success rate of 64.3%. It took 17.34 seconds to plan and execute actions, versus 20.93 seconds for a comparable system.
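The two reported timings can be turned into a relative saving with simple arithmetic. This uses only the figures quoted above and says nothing about variance across trials; `relative_reduction` is a hypothetical helper.

```python
def relative_reduction(new, baseline):
    """Fractional reduction of `new` relative to `baseline`."""
    return (baseline - new) / baseline


ours, baseline = 17.34, 20.93  # seconds to plan and execute, as reported
saving = baseline - ours
print(f"{saving:.2f} s faster ({relative_reduction(ours, baseline):.1%})")
```

With the published figures this works out to roughly 3.6 seconds, or about 17% less time than the comparable system.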

However, the researchers acknowledge that one limitation of their approach is that it applies only to a single, predefined set of grasp types. In future work, they aim to have the system learn different grasp types from data rather than rely on manually specified rules.

“In general, our definition of human grasps covers 77% of the user grasps even before they know the ways of grasps defined in our system,” the researchers wrote. “While our system can deal with most of the unseen human grasps, they tend to lead to higher uncertainty and sometimes would cause the robot to back off and replan… This suggests directions for future research; ideally we would be able to handle a wider range of grasps that a human might want to use.”
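The article does not say how the system measures uncertainty, so the following is only one plausible way to express the quoted behaviour (back off and replan when unsure) in code. It assumes a seven-class probability vector (five grasp types plus two empty-hand states), uses normalised Shannon entropy as the uncertainty measure, and applies an illustrative threshold of 0.6; all three are assumptions rather than details from the paper.

```python
import math


def normalized_entropy(probs):
    """Shannon entropy of a probability vector, scaled to [0, 1]."""
    if len(probs) < 2:
        return 0.0
    h = -sum(p * math.log(p) for p in probs if p > 0)
    return h / math.log(len(probs))


def next_step(probs, threshold=0.6):
    """Hypothetical rule: back off and replan when the classifier is too uncertain.

    `threshold` is an illustrative value, not one reported by the researchers.
    """
    if normalized_entropy(probs) > threshold:
        return "back_off_and_replan"
    return "continue"


confident = [0.90, 0.02, 0.02, 0.02, 0.02, 0.01, 0.01]  # a familiar grasp
unsure = [0.20, 0.18, 0.17, 0.15, 0.12, 0.10, 0.08]     # an unseen grasp
print(next_step(confident), next_step(unsure))
```

Normalising by the log of the class count keeps the threshold meaningful if the label set grows, which matters if, as the researchers suggest, future versions handle a wider range of grasps.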
